Iguazio’s Platform Scales NVIDIA GPU-Accelerated Deployments

GTC, SAN JOSE (MAR 19, 2019)

Samsung SDS Integrates Serverless and Big Data to Automate Machine Learning Application Scaling and Productization

Iguazio, provider of a high-performance platform for serverless and machine learning applications, today unveiled native integration with NVIDIA® GPUs to eliminate data bottlenecks, provide greater scalability and shorten time to production. Iguazio’s platform runs machine learning and data science workloads over Kubernetes, scaling automatically across multiple NVIDIA GPU servers and rapidly processing hundreds of terabytes of data.

Iguazio’s data science platform provides:

- Serverless functions that run data-intensive applications on NVIDIA GPU-enabled servers.

- Integration of its database with RAPIDS, NVIDIA’s open-source machine learning libraries, for faster, more scalable data processing.

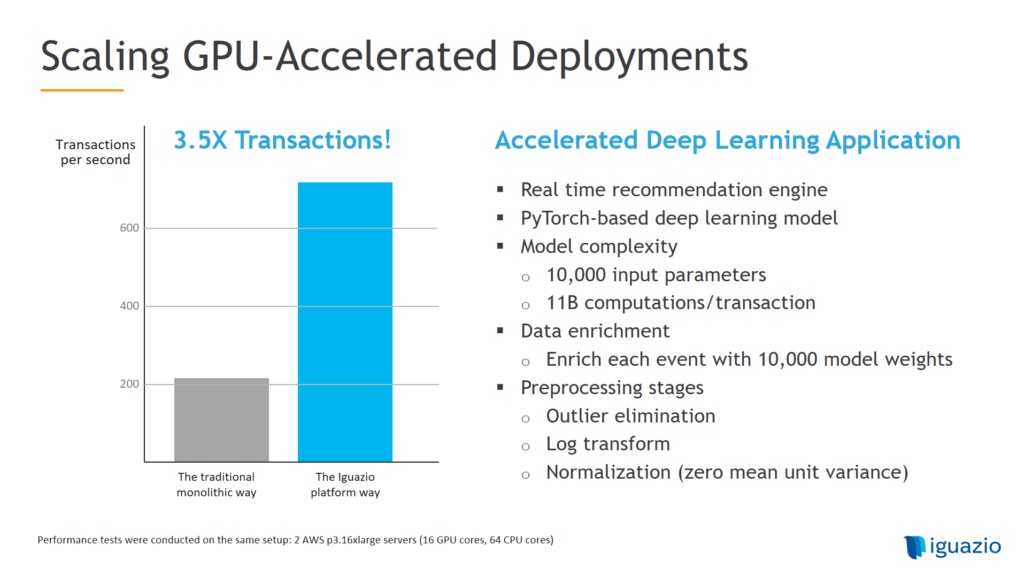

Using Iguazio’s serverless functions (Nuclio) improves GPU utilization and sharing, resulting in application performance almost four times faster than when GPUs are used within monolithic architectures. Nuclio is also fifty times faster than serverless solutions that do not offer GPU support, such as Amazon’s Lambda. Serverless and Kubernetes address key challenges in data science: they simplify operationalization, eliminate manual DevOps processes and cut time to market.

Samsung SDS will use Iguazio to speed up machine learning applications and to leverage the automatic scaling of Iguazio’s serverless framework for greater efficiency and resource sharing. Samsung SDS announced its investment in Iguazio on March 6th. The company has used Iguazio to accelerate its pipeline and streamline the delivery of intelligent applications, analyzing models built directly in its production environment and generating predictions.

“The integration of Iguazio with NVIDIA RAPIDS provides a breakthrough in performance and scalability for data analysis and a broad set of machine learning algorithms,” said Iguazio CTO Yaron Haviv. “Our platform is already powering a collaborative environment and driving cross-team productivity for the processing of vast amounts of data and parallel computing.”

“The RAPIDS suite of open-source libraries enables scaling of GPU-accelerated data processing and machine learning to multi-node, multi-GPU deployments,” said Jeffrey Tseng, Director of Product, AI Infrastructure at NVIDIA. “Iguazio complements the compute power of GPUs by directly connecting GPUs to large-scale, shared data infrastructure.”

About Iguazio

Source:

https://www.iguazio.com/press-release/iguazio-platform-scales-nvidia-gpu-accelerated-deployments/